OpenAI-Microsoft Friction Grows As ChatGPT App Growth Slows, Data Center Buildout Risks Overcapacity

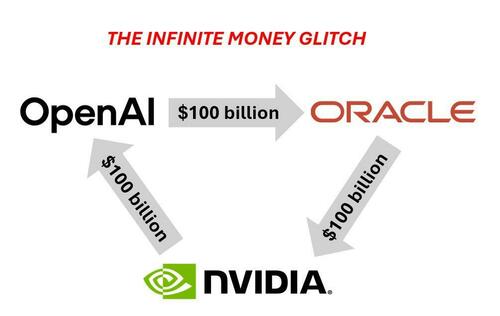

OpenAI’s aggressive expansion of datacenters and infrastructure investments – along with its massive pipeline of future projects, fueled by what we call a “circle jerk” in AI vendor financing – has prompted warnings from Microsoft executives that meeting all of Sam Altman’s infrastructure demands could leave the company with overbuilt data center capacity, according to The Information. Meanwhile, a separate TechCrunch report indicates that ChatGPT’s mobile app growth may have already peaked.

An OpenAI employee told The Information that the chatbot startup ($500 billion valuation) has budgeted approximately $450 billion in server expenses through 2030, with additional plans to rent servers from Microsoft and Oracle.

OpenAI has repeatedly asked Microsoft for more computing capacity, which has sparked friction between the two companies. Microsoft retains “first dibs” on supplying OpenAI data center capacity due to its $13 billion investment; however, practical constraints such as construction limits and power market woes have slowed its ability to scale.

Microsoft executives, including CFO Amy Hood, cautioned against overbuilding servers that might not yield returns, while OpenAI CEO Altman pushed for faster expansion.

The Information continued:

There are usually two sides to most stories of marital friction. For OpenAI, its frustrations speak to the startup’s seemingly bottomless computing needs, which have multiplied by the month. Over the past year, OpenAI CEO Sam Altman frequently pressed Microsoft to move more quickly in adding capacity to meet those needs.

And for their part, Microsoft leaders told Altman the company simply couldn’t supply that capacity as fast as he wanted due to fundamental constraints in the construction process, such as connecting new data centers to power. Chief Financial Officer Amy Hood and her staff told colleagues that catering to OpenAI’s demands could put Microsoft at risk of overbuilding servers that might not produce a financial return, according to people involved in the discussions.

Eventually, the two companies came to a resolution. In the summer of 2024, Altman and Microsoft CEO Satya Nadella agreed it would be impossible for Microsoft to be the startup’s sole cloud provider given OpenAI’s recent growth, according to people who spoke to them. As a result, Microsoft began granting OpenAI waivers to strike deals with other cloud providers.

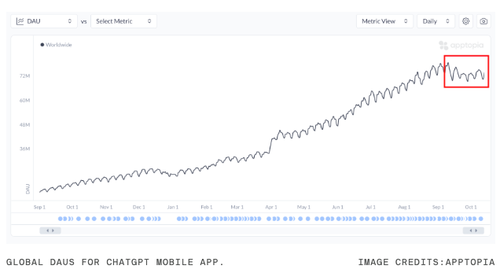

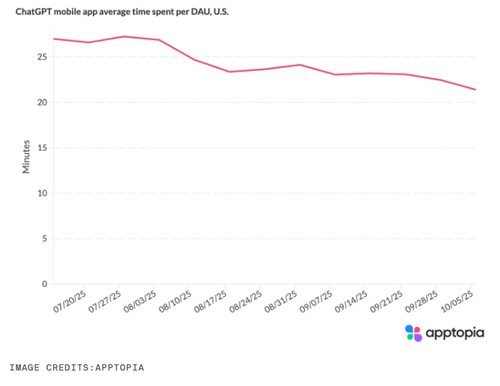

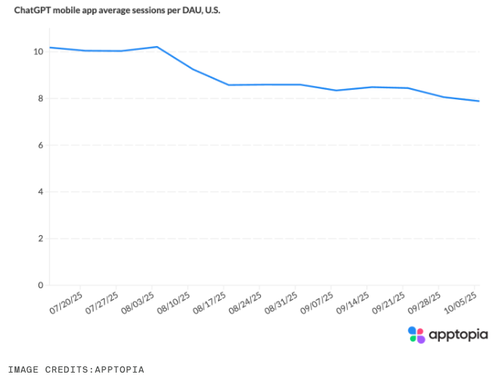

Hood’s warning about overbuilding servers comes as new global daily active user (DAU) data from third-party app intelligence firm Apptopia shows “ChatGPT’s mobile app growth may have hit its peak,” according to TechCrunch.

In the U.S…

And more evidence that ChatGPT’s hype is fading.

Earlier this month, we broke down how the giant “circle jerk” fueling the data center bubble works, exposing the infinite money glitch.

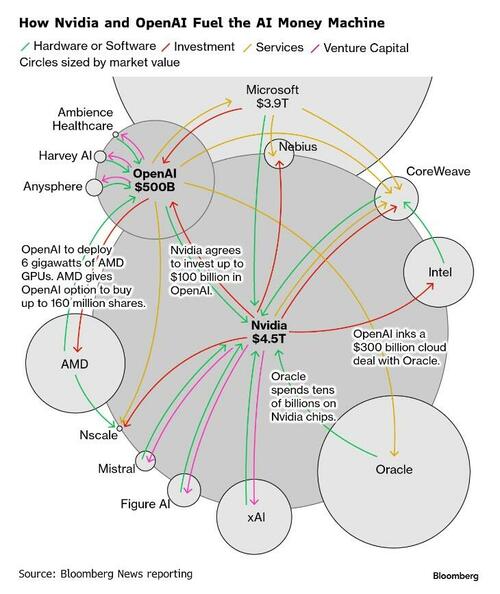

A more complex version, via Bloomberg.

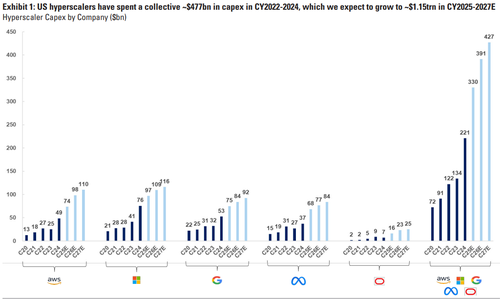

Super impressive Capex by hyperscalers.

And this comes as:

- AI Is Now A Debt Bubble Too, Quietly Surpassing All Banks To Become The Largest Sector In The Market

Meanwhile, the Bank of England warned earlier this month that AI-related valuations are “stretched.” The irony of this warning is that central bankers very rarely make the right calls.

This story builds on:

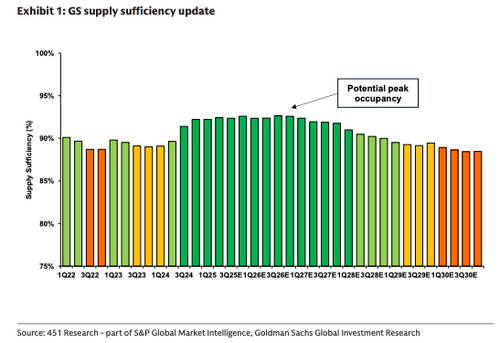

The bigger question is whether user fatigue with AI products is only now beginning to emerge. If that’s the case, Hood’s concerns about OpenAI’s aggressive expansion may be justified, as Goldman’s James Schneider told clients, “The net impact of our model updates extends the duration of peak datacenter occupancy well into 2026 (from the end of 2025 previously). After this point, we forecast a modest, but gradual loosening of supply/demand balance in 2027…”

Schneider added more color:

Reconciling our revised supply and demand updates, our baseline forecast for supply sufficiency stays largely unchanged in 2025 at 92% but increases by an average of 2% in 2026 to 92%, and 2% in 2027 to 92% – with a longer-term forecast supply sufficiency of 89% by 2030 – a 1% increase from our prior version of the supply/demand model. As a result, we now believe the peak of datacenter supply sufficiency is likely to be pushed out into 2026, from the end of 2025 as previously forecast. We believe the datacenter market’s current supply/demand tightness will extend for longer, and our model continues to suggest that market occupancy will stabilize around average levels seen over the past 18 months. In summary, we believe the outlook for datacenter supply, demand, and their implied supply sufficiency remains relatively healthy for now. We continue to watch for incremental datapoints that could cause a shift in expectations – and we are closely watching for any changes (GPU demand, AI model efficiencies, announced incremental supply additions such as Stargate) that could significantly impact medium-term supply/demand balance.

ZeroHedge Pro Subs can read the full global datacenter supply/demand report in the usual place.

Tyler Durden

Mon, 10/20/2025 – 11:50
