Nvidia Slides As Google Emerges As New Threat In AI-Chip Market

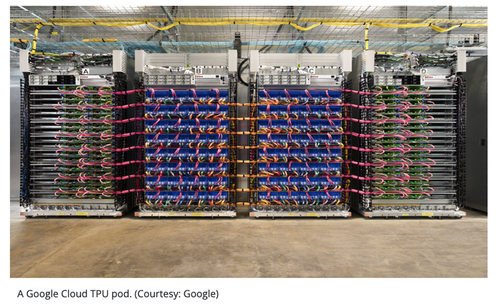

Alphabet shares jumped 4% in premarket trading after The Information reported that Meta is in talks to spend billions on Google’s tensor processing units (TPUs) for its data centers beginning in 2027, with plans to potentially rent TPU capacity from Google Cloud in the near term.

The report sent Nvidia shares down roughly 3.5% as investors weighed the possibility that Google could seize some of Nvidia’s market share. In other words, Google is gaining traction as a credible alternative to Nvidia’s GPUs.

Meanwhile, SoftBank Group shares in Tokyo plunged as much as 11%, hitting a 2.5-month low, as investors worried that Google’s newly released Gemini 3 model could intensify competitive pressure on OpenAI, one of SoftBank’s top investments.

“The stocks are hit by concerns that the competition environment of OpenAI will become tougher after Google’s Gemini 3 received strong reviews,” Mitsubishi UFJ eSmart Securities Co. analyst Tsutomu Yamada told clients.

Internally, Google Cloud executives forecast that TPU adoption could capture up to 10% of Nvidia’s annual revenue, amounting to tens of billions of dollars.

“One of the ways Google has attracted customers to use TPUs in Google Cloud is by pitching that they’re cheaper to use than pricey Nvidia chips. The high prices for Nvidia chips have made it difficult for other cloud providers like Oracle to generate solid gross profit margins from renting out Nvidia chips,” the report noted.

Google recently struck a deal to supply up to 1 million TPUs to Anthropic, further validating demand for TPUs.

After the Anthropic-Google deal was announced, Seaport analyst Jay Goldberg described it as a “really powerful validation” for TPUs. “A lot of people were already thinking about it, and a lot more people are probably thinking about it now.”

Here’s what Bloomberg Intelligence analysts are saying:

Meta’s likely use of Google’s TPUs, which are already used by Anthropic, shows third-party providers of large language models are likely to leverage Google as a secondary supplier of accelerator chips for inferencing in the near term. Meta’s capex of at least $100 billion for 2026 suggests it will spend at least $40-$50 billion on inferencing-chip capacity next year, we calculate. Consumption and backlog growth for Google Cloud might accelerate vs. other hyperscalers and neo-cloud peers due to demand from enterprise customers that want to consume TPUs and Gemini LLMs on Google Cloud.

The bottom line is that Meta’s potential shift toward Google TPUs points to a growing willingness among hyperscalers to diversify away from Nvidia.

Tyler Durden

Tue, 11/25/2025 – 06:55, ZeroHedge News