If you’re tired of censorship and dystopian threats against civil liberties, subscribe to Reclaim The Net.

YouTube CEO Neal Mohan, freshly anointed TIME’s 2025 CEO of the Year, recently sat down with the magazine to deliver a masterclass in corporate optimism.

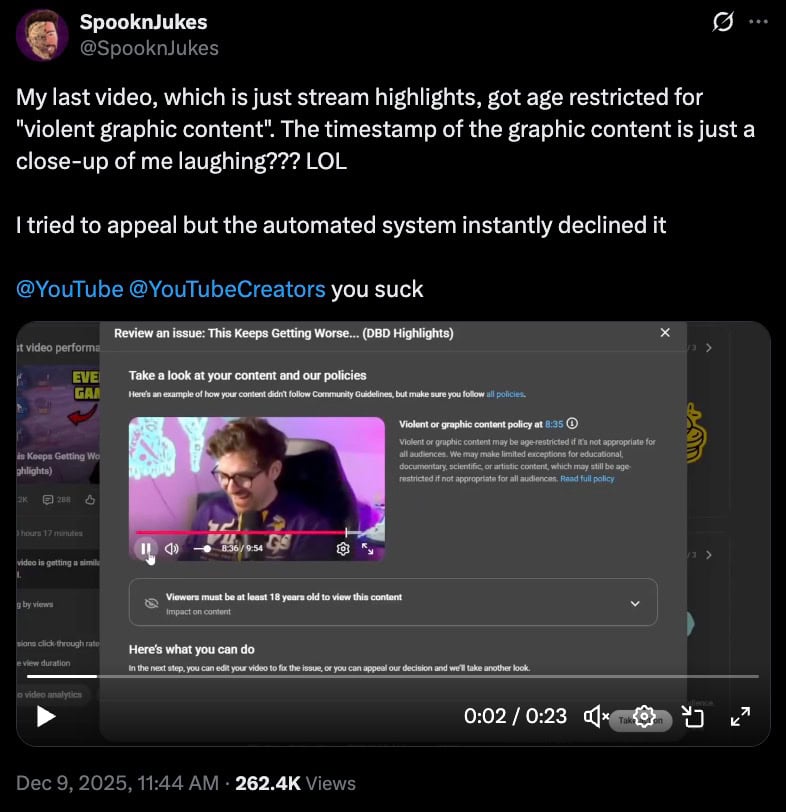

With the enthusiasm of someone who’s never had one of their mundane videos flagged for “graphic content” for laughing too hard, Mohan described his company’s “North Star” as “giving everyone a voice.”

Then, without even flinching, he explained how artificial intelligence will be supercharging YouTube’s already notorious censorship machine.

“AI will create an entirely new class of creators that today can’t do it because they don’t have the skills or they don’t have the equipment,” he said. “But the same rule will apply, which is, there will be good content and bad content, and it will be up to YouTube and our investment in technology and the algorithms to bring that to the fore.”

YouTube wants to fill the site with AI-generated content while simultaneously using other AI to judge what gets to stay up. The circle of platform life, run by code, shaped by code, cleaned up by more code.

And when it comes to removing creators entirely? That’s also part of the plan.

“AI will make our ability to detect and enforce on violative content better, more precise, and able to cope with scale,” Mohan claimed. “Every week, literally, the capabilities get better.”

Mohan is talking about an AI system that has already wrongly nuked creators across the platform, with YouTube downplaying or outright contradicting its role depending on which press release you’re reading.

For a company allegedly trying to “give everyone a voice,” it’s been spending a lot of time erasing them.

Mohan’s interviews read like a sci-fi script where a benevolent overlord insists the machines are here to help. Meanwhile, in the real world, YouTubers are fighting for the digital equivalent of habeas corpus.

Take Enderman, a tech and malware-focused creator with over 350,000 subscribers. His channel was shut down after YouTube mistakenly tied him to another, unrelated channel that had been hit with copyright strikes.

Same story for gaming channel Scrachit Gaming and animation creator 4096. All three were linked by YouTube’s moderation system to a phantom menace of a channel and terminated.

After backlash, their channels were reinstated in November. YouTube insists these weren’t AI decisions. A spokesperson told Mashable that these terminations were “not determined by automated enforcement.”

But in November, YouTube quietly updated its “Content Moderation & Appeals” FAQ and admitted that it uses “both automation and humans to detect and terminate related channels.” So which is it? Human error or robot sabotage? They won’t say. They’re hoping no one notices.

Popular YouTuber MoistCr1TiKaL didn’t need a committee to reach a verdict on Mohan’s vision.

“We haven’t seen anything positive on YouTube as a result of these AI tools that Neal speaks so highly of. They’re a fucking scourge right now,” he said. “AI should never be able to be the judge, jury, and executioner…Neal seems to have a different vision in mind.”

He’s not wrong. YouTube’s AI hallucinated that a clip of horror gamer SpooknJukes laughing was “graphic content.” It demonetized the video, flagged it with an age restriction, and forced the creator to edit out the laughter to get monetization back. Yes, laughing.

In October, tech YouTubers Britec09 and CyberCPU Tech had their videos removed for showing how to install Windows 11 without a Microsoft account. YouTube said the tutorials were “harmful or dangerous.” The videos were restored a month later, quietly, without apology.

And for Pokémon creator SplashPlate? A “low-value” content strike wiped his channel off the map on December 9. It was reinstated the next day. Another hit-and-run enforcement from an AI system whose capabilities, according to Mohan, “get better” every week.

Mohan can say YouTube is about “giving everyone a voice,” but the record tells a different story. Channels are being terminated by mistake. Appeals take days or weeks if they’re reviewed at all. Public outrage has become the only reliable method of tech support. Lose your account? Better hope you’re trending on X.

This isn’t some growing pain of a new moderation tool. It’s YouTube’s actual plan. Automate content creation, automate content policing, and automate user removal. The same platform that launched careers by letting amateurs speak freely is now making sure machines decide who gets to stay.

And if you’re one of the unlucky few targeted by that system? Don’t worry. YouTube’s “North Star” is still to give you a voice. You’ll just need to make sure your voice sounds algorithmically compliant, inoffensive to bots, and indistinguishable from the kind of “new creators” YouTube’s AI will be manufacturing in bulk.

AI is here to help. Just ask the people it hasn’t flagged yet.

The post AI Is YouTube’s New Gatekeeper appeared first on Reclaim The Net.
