“Botched Surgeries And Misidentified Body Parts”: AI Is Off To An Ugly Start In The Operating Room

Artificial intelligence is spreading quickly through modern healthcare, promising to make medical treatment faster, more accurate, and more personalized. But as hospitals and manufacturers adopt the technology, safety records, lawsuits, and regulatory struggles suggest that the transition has not been smooth, according to a new investigation by Reuters.

One example involves Acclarent, a subsidiary of Johnson & Johnson, which added machine-learning software to its TruDi Navigation System in 2021. The company described the update as “a leap forward,” saying it would help ear, nose, and throat surgeons better guide their instruments during sinus procedures.

Before the AI upgrade, the device had generated only a handful of malfunction reports. In the years that followed, however, federal regulators received more than 100 reports involving technical failures or patient injuries. At least 10 patients were reported harmed between late 2021 and 2025, many in cases where the system allegedly gave incorrect information about where surgical tools were located inside the skull.

Some of these incidents were severe. Reports described leaking spinal fluid, punctured skull bases, and strokes caused by damaged arteries. Several patients filed lawsuits, arguing that the device “was arguably safer before integrating” artificial intelligence. Manufacturers and distributors rejected those claims, insisting there is “no credible evidence” linking the AI software to the injuries.

Two Texas cases illustrate how these disputes have played out. In 2022, Erin Ralph suffered a stroke after sinus surgery in which her surgeon relied on TruDi. Her lawsuit claims the system “misled and misdirected” the doctor, who “had no idea he was anywhere near the carotid artery.” A year later, another patient, Donna Fernihough, experienced a similar injury. Her complaint alleges that Acclarent rushed the technology to market and accepted “only 80% accuracy” for some features.

Both cases remain in court, and the company has denied wrongdoing. Court records also show that one surgeon involved had financial ties to Acclarent, though the firm and the doctor’s representatives say those payments were unrelated to patient outcomes.

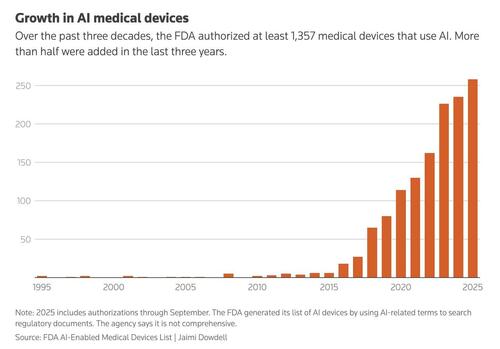

The Reuters piece notes that concerns about TruDi are part of a broader pattern. By 2025, the FDA had authorized more than 1,300 medical devices that use artificial intelligence, roughly twice as many as just a few years earlier. A review by researchers found that many of these products were later recalled, often within a year of approval. The recall rate for AI-based devices was about double that of similar technologies without machine learning.

Federal safety databases contain hundreds of reports involving these products. Some describe prenatal ultrasound software that “wrongly labels fetal structures,” while others involve heart monitors that allegedly failed to detect abnormal rhythms. Manufacturers have said most of these incidents did not lead to patient harm and were sometimes caused by user error or data-display problems.

Regulators warn that such reports are incomplete and cannot prove that a device caused an injury. Still, former FDA employees say the volume of AI products has strained the agency’s ability to monitor risks. Staffing cuts and recruitment difficulties have reduced the number of specialists available to evaluate complex algorithms. As one former reviewer put it, “If you don’t have the resources, things are more likely to be missed.”

Unlike pharmaceutical drugs, most medical devices are not required to undergo large clinical trials before reaching patients. Companies can often secure approval by showing that a new product resembles an older one, even if the update includes artificial intelligence. Critics argue that this system was designed for simpler technologies and may not adequately address the uncertainties introduced by machine learning.

“I think the FDA’s traditional approach to regulating medical devices is not up to the task,” said Dr. Alexander Everhart. “We’re relying on manufacturers to do a good job… I don’t know what’s in place at the FDA represents meaningful guardrails.”

At the same time, AI is moving beyond hardware into everyday medical practice. Doctors increasingly use automated tools to draft notes and manage records, while patients turn to chatbots for health advice. Physicians say these systems can save time, but they also create new risks when people rely on them instead of professional guidance.

Supporters of medical AI argue that the technology will eventually lead to better diagnoses, safer surgeries, and faster drug discovery. Critics counter that the pace of adoption has outstripped oversight.

Taken together, safety reports, legal disputes, and regulatory challenges suggest that artificial intelligence is reshaping medicine faster than institutions can adapt. While the technology offers significant potential benefits, recent experience shows that errors, oversight gaps, and unanswered questions remain part of its rapid expansion.

You can read the full piece by Reuters here.

Tyler Durden

Tue, 02/10/2026 – 18:00
